The Executive Guide to Competitive Benchmarking in Answer Engines

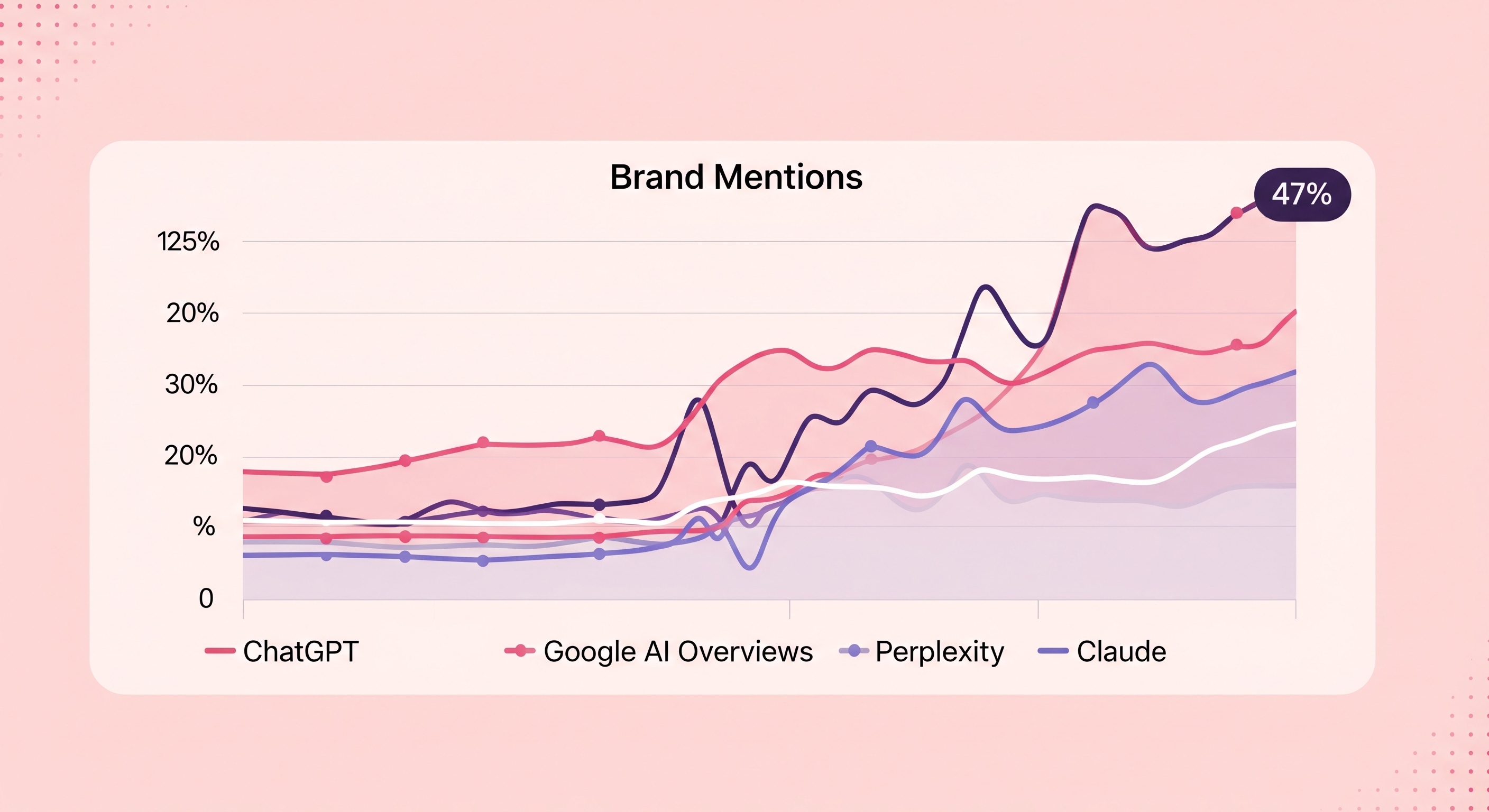

Benchmarking your brand in generative AI answer engines means comparing your AI share of voice (SOV), sentiment, topical relevance, and authority against those of 3–5 competitors. By identifying where competitors are cited but your brand is absent, you can target visibility gaps, optimize content for AI recognition, and strategically grow your AI share of voice across platforms such as ChatGPT, Gemini, Perplexity, and AI Mode.

What Is Competitive Benchmarking in ChatGPT, Gemini, Perplexity, AI Mode, etc.?

Competitive benchmarking in generative AI answer engines such as ChatGPT, Gemini, Perplexity, and AI Mode measures how often, where, and how accurately your brand is cited compared to competitors. Unlike traditional SEO rankings, it focuses on the answers, recommendations, and summaries that LLM-powered platforms generate.

By analyzing AI visibility across 3–5 competitors, brands can:

- Identify missing citations

- Improve brand accuracy and sentiment

- Increase AI trust and authority

- Grow AI Share of Voice (SOV)

Why Competitive Benchmarking in Generative AI Matters

Brands must adapt to AI-driven discovery. Where traditional SEO tracks rankings and clicks, AI visibility tracks how often your brand is cited in answer engines. Competitive benchmarking helps you:

- Identify visibility gaps where competitors dominate AI responses

- Optimize content for AI recognition, not just rankings

- Protect brand reputation by monitoring sentiment and accuracy

- Make data-driven decisions across SEO, PR, and content

For an in-depth framework, see our comprehensive AI visibility metrics guide.

Step-by-Step Benchmarking Framework

Step 1: Define Your Competitor Set

Choose 3–5 direct or aspirational competitors for your brand and/or product.

Step 2: Think About Your Personas

AI visibility depends heavily on prompt intent. Have you already defined personas for your brand and/or product? Who is your product for? What motivates them to buy your brand or product?
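Once personas are defined, one way to turn them into a repeatable prompt set is to cross each persona with a handful of intent templates. The sketch below is illustrative only: the personas, needs, templates, and the `build_prompt_set` helper are all hypothetical, so swap in your own research. It only builds prompt strings and does not call any AI platform.

```python
from __future__ import annotations

from itertools import product

# Hypothetical personas mapped to what each one needs; replace with your own research.
PERSONAS = {
    "a small-business owner": "affordable, easy-to-set-up",
    "an enterprise IT lead": "secure, scalable",
}

# Hypothetical templates, one per intent (recommendation, comparison).
TEMPLATES = [
    "What is the best {need} {category} for {persona}?",
    "Compare the top {category} options for {persona}.",
]


def build_prompt_set(category: str) -> list[dict[str, str]]:
    """Return one prompt per (persona, template) pair, tagged with its persona."""
    prompts = []
    for (persona, need), template in product(PERSONAS.items(), TEMPLATES):
        # str.format ignores keyword arguments that a template does not use.
        text = template.format(need=need, category=category, persona=persona)
        prompts.append({"persona": persona, "prompt": text})
    return prompts


if __name__ == "__main__":
    for item in build_prompt_set("CRM tool"):
        print(f"[{item['persona']}] {item['prompt']}")
```

Keeping the persona tag on every prompt lets you later see whether your visibility differs by audience, not just by platform.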

Step 3: Track Key Metrics

Measure visibility using:

| Metric | What It Measures | Why It Matters |
|---|---|---|
| Mentions in AI responses | How often your brand appears | Highlights AI visibility gaps |
| Sentiment & accuracy | Tone and correctness of mentions | Visibility only matters if it’s accurate and positive |
| Topical relevance | Alignment with key topics | Shows content coverage versus competitors |
| Contextual authority / brand love | How often the brand is cited as reliable | Determines brand credibility in AI answers: whether AI is confident in recommending your brand |

Not all visibility is good visibility. Being cited inaccurately or negatively can damage your brand, making sentiment tracking critical.
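To make the mentions metric concrete: AI share of voice is commonly computed as your brand's mentions divided by the mentions of every brand in your competitor set. The sketch below assumes you have already saved the AI answers as plain strings; the brand names are hypothetical, and the simple whole-word matching will miss aliases, misspellings, and product names.

```python
from __future__ import annotations

import re


def mention_counts(responses: list[str], brands: list[str]) -> dict[str, int]:
    """Count how many responses mention each brand (at most once per response)."""
    counts = {brand: 0 for brand in brands}
    for text in responses:
        for brand in brands:
            # Naive whole-word, case-insensitive match; ignores aliases and typos.
            if re.search(rf"\b{re.escape(brand)}\b", text, flags=re.IGNORECASE):
                counts[brand] += 1
    return counts


def share_of_voice(counts: dict[str, int]) -> dict[str, float]:
    """Each brand's mentions as a fraction of all tracked-brand mentions."""
    total = sum(counts.values())
    if total == 0:
        return {brand: 0.0 for brand in counts}
    return {brand: n / total for brand, n in counts.items()}


if __name__ == "__main__":
    answers = [
        "Acme and Globex are both solid choices.",
        "Most reviewers recommend Globex.",
        "Initech is popular with startups.",
    ]
    counts = mention_counts(answers, ["Acme", "Globex", "Initech"])
    for brand, sov in share_of_voice(counts).items():
        print(f"{brand}: {sov:.0%}")
```

With these sample answers, Globex accounts for two of the four brand mentions and gets a 50% share of voice, while Acme and Initech get 25% each. Mentions alone say nothing about tone, which is why sentiment needs to be tracked alongside them.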

Step 4: Run Multi-Model Audits

Do not benchmark only ChatGPT.

AI visibility varies significantly across:

- ChatGPT

- Gemini

- Perplexity

- AI Overviews / AI Mode

Use platforms like Seonali to run the same prompt set across multiple models and consolidate:

- Mentions

- Sentiment

- Source attribution

This creates a single source of truth for AI visibility.
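As an illustration of what consolidating can look like, here is a minimal sketch that rolls per-answer observations up into one row per model and brand. It assumes the answers have already been collected (with a tool such as Seonali, an export, or manual review) and labelled; the `Observation` record, the -1 to 1 sentiment scale, and `consolidate` are hypothetical and do not reflect any tool's actual data model.

```python
from __future__ import annotations

from collections import Counter, defaultdict
from dataclasses import dataclass, field


@dataclass
class Observation:
    """One brand's presence (or absence) in one model's answer to one prompt.

    Hypothetical record shape; how you collect these is outside this sketch.
    """
    model: str
    prompt: str
    brand: str
    mentioned: bool
    sentiment: float | None = None  # assumed scale: -1.0 negative to 1.0 positive
    sources: list[str] = field(default_factory=list)  # domains cited with the mention


def consolidate(observations: list[Observation]) -> dict[tuple[str, str], dict]:
    """Roll observations up into one summary row per (model, brand)."""
    groups: dict[tuple[str, str], list[Observation]] = defaultdict(list)
    for obs in observations:
        groups[(obs.model, obs.brand)].append(obs)

    summary = {}
    for key, rows in groups.items():
        mentioned = [r for r in rows if r.mentioned]
        scores = [r.sentiment for r in mentioned if r.sentiment is not None]
        summary[key] = {
            # Share of prompts in which this model mentioned the brand.
            "mention_rate": len(mentioned) / len(rows),
            "avg_sentiment": sum(scores) / len(scores) if scores else None,
            # Most frequently cited sources; ties keep first-seen order.
            "top_sources": Counter(s for r in mentioned for s in r.sources).most_common(3),
        }
    return summary


if __name__ == "__main__":
    observations = [
        Observation("ChatGPT", "best crm for startups", "Acme", True, 0.6, ["example-reviews.com"]),
        Observation("ChatGPT", "crm pricing comparison", "Acme", False),
        Observation("Gemini", "best crm for startups", "Acme", True, 0.2, ["example-docs.com"]),
    ]
    for (model, brand), row in consolidate(observations).items():
        print(model, brand, row)
```

Keeping mentions, sentiment, and sources in the same row per model makes it easy to spot a brand that is visible on one platform but absent, or described negatively, on another.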

Step 5: Analyze Visibility Gaps

Compare your metrics with your competitors' to identify:

- Topics your competitors are cited for but you are not (across both branded and non-branded prompts).

- Areas where your brand perception and reputation need improvement.

- Opportunities to position your brand as a credible source. Check which sources the AI draws on when it describes your brand, and use this information to optimize your presence on those sources.
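The first comparison, topics where competitors are cited but you are not, reduces to simple set logic once each topic's citations are recorded. The sketch below assumes a hypothetical mapping from topic to the set of brands cited in AI answers for that topic; `find_topic_gaps` and `is_branded` are illustrative helpers, and `is_branded` uses a naive substring check to separate branded from non-branded prompts.

```python
from __future__ import annotations


def is_branded(prompt: str, brand: str) -> bool:
    """Naive check: a prompt counts as branded if it names the brand explicitly."""
    return brand.lower() in prompt.lower()


def find_topic_gaps(
    citations: dict[str, set[str]], brand: str, competitors: set[str]
) -> dict[str, set[str]]:
    """Return topics where at least one competitor is cited but `brand` is not.

    `citations` maps each topic to the brands cited in AI answers for it
    (hypothetical structure; build it from your audit results).
    """
    gaps = {}
    for topic, cited in citations.items():
        rivals = cited & competitors  # competitors that appear for this topic
        if rivals and brand not in cited:
            gaps[topic] = rivals
    return gaps


if __name__ == "__main__":
    citations = {
        "best crm for startups": {"Globex", "Initech"},
        "crm pricing comparison": {"Acme", "Globex"},
        "crm data migration": set(),
    }
    print(find_topic_gaps(citations, "Acme", {"Globex", "Initech"}))
    print(is_branded("Is Acme good for startups?", "Acme"))
```

Here only "best crm for startups" is flagged: a competitor is cited there and Acme is not, whereas "crm data migration" has no citations at all and is better treated as an open topic than a competitive gap.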

Step 6: Prioritize Strategic Actions

Use the analysis to guide AI content strategy:

| Action | Purpose | Example |
|---|---|---|
| Content creation | Fill topic gaps | Publish targeted guides or blogs |
| Asset optimization | Improve AI recognition | Enhance metadata and context |
| Strategic partnerships | Boost credibility | Collaborate with authoritative sources |

Focus on high-impact gaps first: topics with strong AI demand and low brand presence.
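One simple way to rank high-impact gaps is to score each topic by its demand multiplied by the share of voice you do not yet have. The sketch assumes hypothetical 0-to-1 inputs: `demand` could come from whatever demand estimate you trust, and `brand_sov` from your benchmark. The `TopicGap` record, `prioritize`, and the multiplicative weighting are illustrative choices, not a standard formula.

```python
from __future__ import annotations

from typing import NamedTuple


class TopicGap(NamedTuple):
    topic: str
    demand: float     # hypothetical relative demand for the topic, 0 to 1
    brand_sov: float  # your AI share of voice on the topic, 0 to 1


def prioritize(gaps: list[TopicGap]) -> list[tuple[str, float]]:
    """Rank topics by demand * (1 - brand_sov): high demand, low presence first."""
    scored = [(g.topic, g.demand * (1 - g.brand_sov)) for g in gaps]
    return sorted(scored, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    ranked = prioritize([
        TopicGap("crm pricing comparison", demand=0.6, brand_sov=0.75),
        TopicGap("best crm for startups", demand=0.9, brand_sov=0.1),
        TopicGap("crm data migration", demand=0.3, brand_sov=0.0),
    ])
    for topic, score in ranked:
        print(f"{score:.2f}  {topic}")
```

With these sample inputs, "best crm for startups" ranks first (0.81), ahead of "crm data migration" (0.30) and "crm pricing comparison" (0.15). A multiplicative score keeps a topic low on the list if either demand is weak or you already own most of the conversation.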

Conclusion

Benchmarking AI visibility is essential for strategic growth. By tracking metrics and identifying gaps, your brand can strengthen its presence in answer engines. Integrate these insights with the comprehensive AI visibility metrics framework for measurable, competitive advantage.

FAQ

What is competitive benchmarking in ChatGPT, Gemini, Perplexity, AI Mode, etc.?

Competitive benchmarking in AI answer engines measures how often and how accurately a brand is cited in AI-generated responses compared to competitors across platforms like ChatGPT, Gemini, and Perplexity.

How is AI visibility different from SEO?

SEO focuses on rankings and clicks, while AI visibility tracks brand mentions, sentiment, and authority inside AI-generated answers, often before users visit any website.

What metrics matter most for AI benchmarking?

Key metrics include AI Share of Voice, sentiment and accuracy, topical relevance, and contextual authority (how confidently AI recommends your brand).

How many competitors should I benchmark?

Most brands should benchmark against 3–5 competitors to balance strategic insight with actionable data.

Why should brands track multiple AI models?

Different models cite different sources and brands. Visibility and positive sentiment in ChatGPT do not guarantee visibility in Gemini or Perplexity.